Executive Summary

Cyble Research and Intelligence Labs (CRIL) observed large-scale, systematic exposure of ChatGPT API keys across the public internet. Over 5,000 publicly accessible GitHub repositories and approximately 3,000 live production websites were found leaking API keys hardcoded in source code and client-side JavaScript.

GitHub has emerged as a key discovery surface, with API keys frequently committed directly into source files or stored in configuration and .env files. The risk is further amplified by public-facing websites that embed active keys in front-end assets, leading to persistent, long-term exposure in production environments.

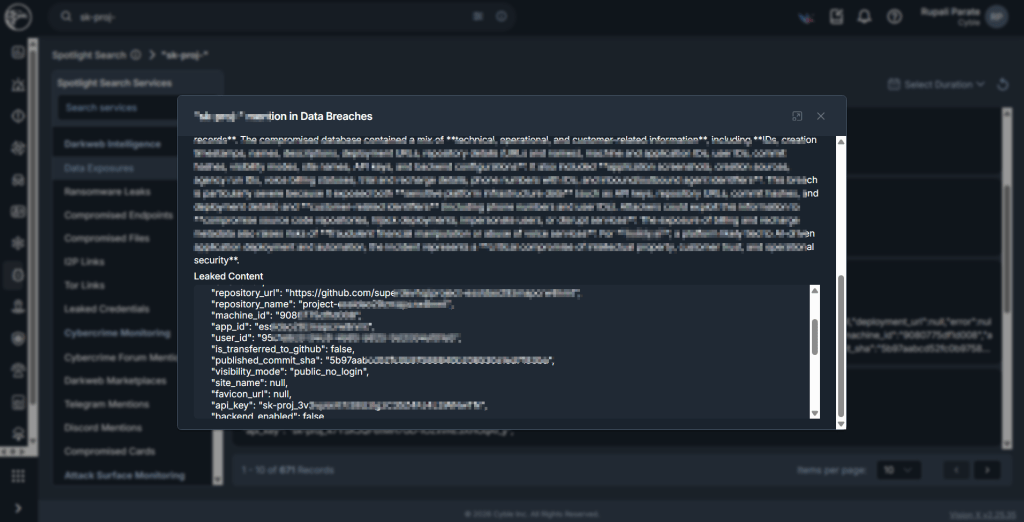

CRIL’s investigation further revealed that several exposed API keys were referenced in discussions surfaced through the Cyble Vision platform. The exposure of these credentials significantly lowers the barrier for threat actors, enabling faster downstream abuse and facilitating broader criminal exploitation.

These findings underscore a critical security gap in the AI adoption lifecycle. AI credentials must be treated as production secrets and protected with the same rigor as cloud and identity credentials to prevent ongoing financial, operational, and reputational risk.

Key Takeaways

- GitHub is a primary vector for the discovery of exposed ChatGPT API keys.

- Public websites and repositories form a continuous exposure loop for AI secrets.

- Attackers can use automated scanners and GitHub search operators to harvest keys at scale.

- Exposed AI keys are monetized through inference abuse, resale, and downstream criminal activity.

- Most organizations lack monitoring for AI credential misuse.

AI API keys are production secrets, not developer conveniences. Treating them casually is creating a new class of silent, high-impact breaches.

Richard Sands, CISO, Cyble

Overview, Analysis, and Insights

“The AI Era Has Arrived — Security Discipline Has Not”

We are firmly in the AI era. From chatbots and copilots to recommendation engines and automated workflows, artificial intelligence is no longer experimental. It is production-grade infrastructure with end-to-end workflows and pipelines. Modern websites and applications increasingly rely on large language models (LLMs), token-based APIs, and real-time inference to deliver capabilities that were unthinkable just a few years ago.

This rapid adoption has also given rise to a development culture often referred to as “vibe coding.” Developers, startups, and even enterprises are prioritizing speed, experimentation, and feature delivery over foundational security practices. While this approach accelerates innovation, it also introduces systemic weaknesses that attackers are quick to exploit.

One of the most prevalent and most dangerous of these weaknesses is the widespread exposure of hardcoded AI API keys across both source code repositories and production websites.

A rapidly expanding digital risk surface increases the likelihood of compromise, and a preventive strategy is the best way to reduce it. Cyble Vision provides users with insight into exposures across the surface, deep, and dark web, generating real-time alerts that they can review and act on.

SOC teams can use this data to remediate compromised credentials and their associated endpoints. Because threat actors may weaponize these credentials for malicious activity that is then attributed to the affected users, proactive intelligence is essential to keeping the digital risk surface secure.

“Tokens are the new passwords — they are being mishandled.”

AI platforms use token-based authentication. API keys act as high-value secrets that grant access to inference capabilities, billing accounts, usage quotas, and, in some cases, sensitive prompts or application behavior. From a security standpoint, these keys are equivalent to privileged credentials.

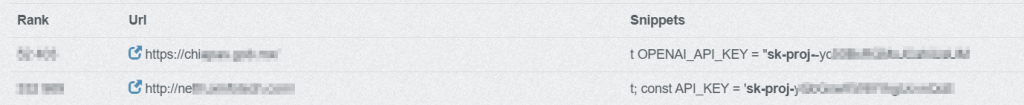

Despite this, ChatGPT API keys are frequently embedded directly in JavaScript files, front-end frameworks, static assets, and configuration files accessible to end users. In many cases, keys are visible through browser developer tools, minified bundles, or publicly indexed source code. An example of keys hardcoded in popular, reputable websites is shown in Figure 1.

This reflects a fundamental misunderstanding: API keys are being treated as configuration values rather than as secrets. In the AI era, that assumption is dangerously outdated. In some cases, this happens unintentionally, while in others, it’s a deliberate trade-off that prioritizes speed and convenience over security.

When API keys are exposed publicly, attackers do not need to compromise infrastructure or exploit vulnerabilities. They simply collect and reuse what is already available.

CRIL has identified multiple publicly accessible websites and GitHub repositories containing hardcoded ChatGPT API keys embedded directly within client-side code. These keys are exposed to any user who inspects network requests or application source files.

A commonly observed pattern resembles the following:

```javascript
const OPENAI_API_KEY = "sk-proj-XXXXXXXXXXXXXXXXXXXXXXXX";
```

```javascript
const OPENAI_API_KEY = "sk-svcacct-XXXXXXXXXXXXXXXXXXXXXXXX";
```

The prefix “sk-proj-” typically represents a project-scoped secret key associated with a specific project environment, inheriting its usage limits and billing configuration. The “sk-svcacct-” prefix generally denotes a service account–based key intended for automated backend services or system integrations.

Regardless of type, both keys function as privileged authentication tokens that enable direct access to AI inference services and billing resources. When embedded in client-side code, they are fully exposed and can be immediately harvested and misused by threat actors.
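Defenders can look for the same pattern in their own code before attackers do. The following is a minimal sketch, assuming keys look like one of the two prefixes above followed by at least 20 URL-safe characters; the character set and length are illustrative guesses rather than an official key format, and `findCandidateKeys` and `maskKey` are hypothetical helper names.

```javascript
// Assumed pattern: the prefixes come from the examples above; the character
// set and minimum length are illustrative guesses, not an official format.
const KEY_PATTERN = /\bsk-(?:proj|svcacct)-[A-Za-z0-9_-]{20,}/g;

// Return every candidate key found in a blob of source text.
function findCandidateKeys(sourceText) {
  return sourceText.match(KEY_PATTERN) ?? [];
}

// Mask a key so it can be reported without leaking it a second time.
function maskKey(key) {
  // Position just past the second hyphen, i.e. the end of "sk-proj-" or "sk-svcacct-".
  const prefixEnd = key.indexOf("-", 3) + 1;
  return key.slice(0, prefixEnd) + "****" + key.slice(-4);
}

const sample = 'const OPENAI_API_KEY = "sk-proj-XXXXXXXXXXXXXXXXXXXXXXXX";';
console.log(findCandidateKeys(sample).map(maskKey));
```

Masking before reporting matters because scan results often end up in tickets, chat channels, or CI logs, which would otherwise become another exposure point.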

GitHub as a High-Fidelity Source of AI Secrets

Public GitHub repositories have emerged as one of the most reliable discovery surfaces for exposed ChatGPT API keys. During development, testing, and rapid prototyping, developers frequently hardcode OpenAI credentials into source code, configuration files, or .env files—often with the intent to remove or rotate them later. In practice, these secrets persist in commit history, forks, and archived repositories.

CRIL analysis identified over 5,000 GitHub repositories containing hardcoded OpenAI API keys. These exposures span JavaScript applications, Python scripts, CI/CD pipelines, and infrastructure configuration files. In many cases, the repositories were actively maintained or recently updated, increasing the likelihood that the exposed keys were still valid at the time of discovery.

Notably, the majority of exposed keys were configured to access widely used ChatGPT models, making them particularly attractive for abuse. These models are commonly integrated into production workflows, increasing both their exposure rate and their value to threat actors.

Once committed to GitHub, API keys can be rapidly indexed by automated scanners that monitor new commits and repository updates in near real time. This significantly reduces the window between exposure and exploitation, often to hours or even minutes.

Public Websites: Persistent Exposure in Production Environments

Beyond source code repositories, CRIL observed widespread exposure of ChatGPT API keys directly within production websites. In these cases, API keys were embedded in client-side JavaScript bundles, static assets, or front-end framework files, making them accessible to any user inspecting the application.

CRIL identified approximately 3,000 public-facing websites exposing ChatGPT API keys in this manner. Unlike repository leaks, which may be removed or made private, website-based exposures often persist for extended periods, continuously leaking secrets to both human users and automated scrapers.

These implementations frequently invoke ChatGPT APIs directly from the browser, bypassing backend mediation entirely. As a result, exposed keys are not only visible but actively used in real time, making them trivial to harvest and immediately abuse.

As with GitHub exposures, the most referenced models were highly prevalent ChatGPT variants used for general-purpose inference, indicating that these keys were tied to live, customer-facing functionality rather than isolated testing environments. These models strike a balance between capability and cost, making them ideal for high-volume abuse such as phishing content generation, scam scripts, and automation at scale.

Hard-coding LLM API keys risks turning innovation into liability, as attackers can drain AI budgets, poison workflows, and access sensitive prompts and outputs. Enterprises must manage secrets and monitor exposure across code and pipelines to prevent misconfigurations from becoming financial, privacy, or compliance issues.

Kaustubh Medhe, CPO, Cyble

From Exposure to Exploitation: How Attackers Monetize AI Keys

Threat actors continuously monitor public websites, GitHub repositories, forks, gists, and exposed JavaScript bundles to identify high-value secrets, including OpenAI API keys. Once discovered, these keys are rapidly validated through automated scripts and immediately operationalized for malicious use.

Compromised keys are typically abused to:

- Execute high-volume inference workloads

- Generate phishing emails, scam scripts, and social engineering content

- Support malware development and lure creation

- Circumvent usage quotas and service restrictions

- Drain victim billing accounts and exhaust API credits

In several cases, CRIL used Cyble Vision to identify keys that originated from these exposures and were subsequently leaked, as noted in our spotlight mentions (see Figure 2 and Figure 3).

Unlike activity in traditional infrastructure, AI API activity is often not integrated into centralized logging, SIEM monitoring, or anomaly detection frameworks. As a result, malicious usage can persist undetected until organizations encounter billing spikes, quota exhaustion, degraded service performance, or operational disruptions.

Conclusion

The exposure of ChatGPT API keys across approximately 3,000 websites and more than 5,000 GitHub repositories highlights a systemic security blind spot in the AI adoption lifecycle. These credentials are actively harvested, rapidly abused, and difficult to trace once compromised.

As AI becomes embedded in business-critical workflows, organizations must abandon the perception that AI integrations are experimental or low risk. AI credentials are production secrets and must be protected accordingly.

Failure to secure them will continue to expose organizations to financial loss, operational disruption, and reputational damage.

SOC teams should proactively monitor for exposed endpoints using tools such as Cyble Vision, which provides real-time alerts and visibility into compromised endpoints.

This allows them to identify which endpoints and credentials were compromised and to secure them as quickly as possible.

Our Recommendations

Eliminate Secrets from Client-Side Code

AI API keys must never be embedded in JavaScript or front-end assets. All AI interactions should be routed through secure backend services.
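One way to route calls through a backend is sketched below, under stated assumptions. The browser sends only the user's message, and the server attaches the key from its own environment. `handleChat` is a hypothetical handler name, the 2,000-character input limit is an arbitrary placeholder, and the model name is whatever the deployment already uses. The injectable `fetchImpl` parameter exists only so the handler can be exercised without a network call.

```javascript
// Hypothetical server-side handler. The API key lives only on the server
// (for example in process.env) and is never sent to the browser.
async function handleChat(requestBody, { apiKey, model, fetchImpl = fetch }) {
  if (!apiKey) {
    return { status: 500, body: { error: "server is missing its API key" } };
  }
  const message = String(requestBody?.message ?? "").trim();
  if (!message || message.length > 2000) { // assumed input limit
    return { status: 400, body: { error: "message is required (max 2000 chars)" } };
  }

  const upstream = await fetchImpl("https://api.openai.com/v1/chat/completions", {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${apiKey}`,
    },
    body: JSON.stringify({ model, messages: [{ role: "user", content: message }] }),
  });
  if (!upstream.ok) {
    // Do not forward upstream error details; they may describe the account.
    return { status: 502, body: { error: "upstream request failed" } };
  }
  const data = await upstream.json();
  // Return only the reply text, never headers or the key itself.
  return { status: 200, body: { reply: data.choices?.[0]?.message?.content ?? "" } };
}
```

A web framework route would call this with something like `handleChat(req.body, { apiKey: process.env.OPENAI_API_KEY, model })` and pass the returned status and body to the client. Because the handler is the only code that sees the key, it is also the natural place to add authentication, rate limits, and logging.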

Enforce GitHub Hygiene and Secret Scanning

- Prevent commits containing secrets through pre-commit hooks and CI/CD enforcement

- Continuously scan repositories, forks, and gists for leaked keys

- Assume exposure once a key appears in a public repository and rotate immediately

- Maintain a complete inventory of all repositories associated with the organization, including shadow IT projects, archived repositories, personal developer forks, test environments, and proof-of-concept code

- Enable automated secret scanning and push protection at the organization level
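As an illustration of the pre-commit idea above, the sketch below scans a unified diff, such as the text produced by `git diff --cached`, and reports added lines that contain something shaped like an API key. The key pattern is an assumption based on the prefixes discussed earlier, and `scanStagedDiff` is a hypothetical name. It is not a substitute for a maintained secret scanner.

```javascript
// Assumed key shape; the length and character set are illustrative guesses.
const KEY_PATTERN = /\bsk-(?:proj|svcacct)-[A-Za-z0-9_-]{20,}/;

// Scan unified diff text and report added lines that look like they contain a key.
function scanStagedDiff(diffText) {
  const findings = [];
  let currentFile = null;
  for (const line of diffText.split("\n")) {
    if (line.startsWith("+++ ")) {
      // "+++ b/path/to/file" names the file the following hunks belong to.
      currentFile = line.slice(4).replace(/^b\//, "");
    } else if (line.startsWith("+") && KEY_PATTERN.test(line)) {
      // Redact the match so the report does not repeat the secret.
      const redacted = line.slice(1).trim().replace(KEY_PATTERN, "[REDACTED]");
      findings.push({ file: currentFile, line: redacted });
    }
  }
  return findings;
}
```

A hook script would feed the staged diff into `scanStagedDiff` and exit with a non-zero status when the result is non-empty, which blocks the commit. Only added lines are checked, so keys already present in history still need a separate full-history scan.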

Apply Least Privilege and Usage Controls

- Restrict API keys by project scope and environment (separate dev, test, prod)

- Apply IP allowlisting where possible

- Enforce usage quotas and hard spending limits

- Rotate keys frequently and revoke any exposed credentials immediately

- Avoid sharing keys across teams or applications
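Provider-side limits are the primary control, but a backend that fronts the AI API can also enforce its own ceiling. The sketch below is a hypothetical in-memory guard that tracks estimated spend per key label and per UTC day; the class name, the labels, and the dollar figures are placeholders, and a real deployment would need shared, persistent storage.

```javascript
// Hypothetical in-memory budget guard for a backend that fronts an AI API.
// Spend is tracked per key label and per UTC day; limits are placeholders.
class SpendGuard {
  constructor(hardLimitUsd) {
    this.hardLimitUsd = hardLimitUsd;
    this.spent = new Map(); // "label|YYYY-MM-DD" -> USD spent so far
  }

  // Record the cost if it fits under the hard limit; otherwise refuse the call.
  tryCharge(keyLabel, costUsd, now = new Date()) {
    const bucket = `${keyLabel}|${now.toISOString().slice(0, 10)}`;
    const current = this.spent.get(bucket) ?? 0;
    if (current + costUsd > this.hardLimitUsd) return false;
    this.spent.set(bucket, current + costUsd);
    return true;
  }
}

const guard = new SpendGuard(5); // assumed 5 USD daily ceiling per key
console.log(guard.tryCharge("prod-chatbot", 3)); // true
console.log(guard.tryCharge("prod-chatbot", 3)); // false, would exceed the ceiling
console.log(guard.tryCharge("dev-sandbox", 3));  // true, separate key and budget
```

Keeping a separate label per environment mirrors the first bullet above: a runaway or stolen dev key exhausts only its own budget.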

Implement Secure Key Management Practices

- Store API keys in secure secret management systems

- Avoid storing keys in plaintext configuration files

- Use environment variables securely and restrict access permissions

- Do not log API keys in application logs, error messages, or debugging outputs

- Ensure keys are excluded from backups, crash dumps, and telemetry exports
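To illustrate the logging point above, the following sketch scrubs key-shaped strings from a message and its context before anything is written out. The key pattern is an assumption based on the prefixes discussed earlier, and `redactSecrets` and `safeLog` are hypothetical helpers, not part of any logging library.

```javascript
// Assumed key shape; the length and character set are illustrative guesses.
const KEY_PATTERN = /\bsk-(?:proj|svcacct)-[A-Za-z0-9_-]{20,}/g;

// Recursively replace key-shaped substrings in strings, arrays, and plain objects.
function redactSecrets(value) {
  if (typeof value === "string") {
    return value.replace(KEY_PATTERN, "[REDACTED_API_KEY]");
  }
  if (Array.isArray(value)) {
    return value.map(redactSecrets);
  }
  if (value && typeof value === "object") {
    // Only enumerable own properties are visited; Error objects should be
    // converted to plain objects (message, stack) before being passed in.
    return Object.fromEntries(
      Object.entries(value).map(([key, inner]) => [key, redactSecrets(inner)])
    );
  }
  return value;
}

// Emit a structured log line with secrets removed from every field.
function safeLog(message, context = {}) {
  console.log(JSON.stringify({
    message: redactSecrets(message),
    context: redactSecrets(context),
  }));
}

safeLog("upstream call failed", {
  headers: { Authorization: "Bearer sk-proj-XXXXXXXXXXXXXXXXXXXXXXXX" },
});
```

Redaction at the logging boundary is a safety net rather than the main control, since it only catches keys that match the assumed pattern.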

Monitor AI Usage Like Cloud Infrastructure

Establish baselines for normal AI API usage and alert on anomalies such as spikes, unusual geographies, or unexpected model usage.
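A simple baseline check for volume spikes might look like the sketch below. It compares each hour's request count against the mean and standard deviation of the preceding window. The 24-hour window, the threshold of three standard deviations, and the function name `findUsageSpikes` are all assumptions for illustration, and the sketch covers only volume, not geography or model usage.

```javascript
// Flag hours whose request count sits far above the recent baseline.
function findUsageSpikes(hourlyCounts, { window = 24, threshold = 3 } = {}) {
  const spikes = [];
  for (let i = window; i < hourlyCounts.length; i++) {
    const baseline = hourlyCounts.slice(i - window, i);
    const mean = baseline.reduce((sum, n) => sum + n, 0) / window;
    const variance =
      baseline.reduce((sum, n) => sum + (n - mean) ** 2, 0) / window;
    const stdDev = Math.sqrt(variance);
    // Floor the deviation at 1 so a perfectly flat baseline tolerates small jitter.
    const limit = mean + threshold * Math.max(stdDev, 1);
    if (hourlyCounts[i] > limit) {
      spikes.push({ index: i, count: hourlyCounts[i], limit });
    }
  }
  return spikes;
}

// 24 quiet hours, a small wobble, then a burst typical of key abuse.
const counts = [...Array(24).fill(100), 102, 900];
console.log(findUsageSpikes(counts).map((s) => s.index));
```

In practice the counts would come from provider usage exports or from the backend's own request logs, and alerts would be forwarded to the same SIEM that handles other infrastructure signals.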